Are you looking for APIs to extract data? Use these software tools for web scraping

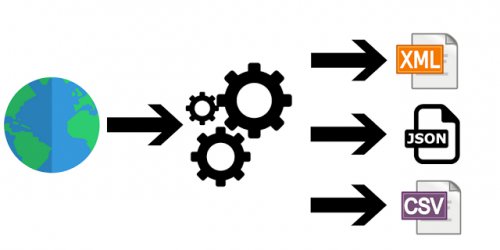

To start with, web scraping is the automation of the “copy & paste” method. Data published on websites is normally only viewable through a web browser, and the majority of sites will not let you save or download it. If you need the information, you must copy and paste it manually. And, as we all know, doing so on a daily basis can be tedious. Web scraping automates this process so that scraping software can collect data from websites in a fraction of the time it would take to gather it by hand.
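To make the “copy & paste” comparison concrete, here is a minimal sketch of what a scraper does under the hood, using only Python's standard library. The sample HTML and the names `SAMPLE_HTML`, `TableCellParser`, and `scrape_rows` are assumptions made up for illustration; a real scraper would first download the page and would usually have to deal with messier markup.

```python
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML instead.
SAMPLE_HTML = """
<table>
  <tr><td>Laptop</td><td>999</td></tr>
  <tr><td>Phone</td><td>599</td></tr>
</table>
"""


class TableCellParser(HTMLParser):
    """Collects the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td":
            if not self.rows:  # tolerate a <td> that appears without a <tr>
                self.rows.append([])
            self.rows[-1].append("")
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag == "td" and self._in_cell:
            # Trim surrounding whitespace once the cell is complete.
            self.rows[-1][-1] = self.rows[-1][-1].strip()
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data


def scrape_rows(html):
    """Return the table cells of `html` as a list of rows."""
    parser = TableCellParser()
    parser.feed(html)
    return parser.rows


rows = scrape_rows(SAMPLE_HTML)
```

Each inner list in `rows` corresponds to one table row, which is the same data you would otherwise copy out of the browser cell by cell.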

An application programming interface, or API, allows businesses to extend the functionality of their programs to third-party developers, commercial partners, and internal departments. Services and products can communicate with one another and benefit from one another’s data and capabilities thanks to an established interface. In the last decade, APIs have grown in popularity to the point that many of today’s most popular internet programs would be difficult to construct without them.

To select the right API for your business, you need to analyze its main features. There are many interesting web scraping tools on the market. However, we think you should use these software tools for web scraping:

1. Codery

The Codery API crawls a website and extracts all of its structured data. You only need to provide the URL and it will take care of the rest. You can extract specific data from any webpage in the form of an auto-filling spreadsheet. Moreover, this API has millions of reliable proxies available, so you can acquire the information you need without fear of being blocked.

With a single request, Codery crawls pages at search-engine scale. To handle all types of websites, it uses a real browser to scrape pages and run all of the JavaScript on them. Finally, Codery offers a variety of pricing plans, which include the option to block images and CSS from websites.

2. Browse AI

Browse AI is an API for web scraping that allows you to extract specific data from any website in the form of a spreadsheet that fills itself. Moreover, the platform can monitor websites and notify you of changes.

Browse AI also offers 1-click automations for popular use cases. Used by more than 2,500 individuals and companies, it has flexible pricing and supports geolocation-based data.

3. Page2API

Page2API is a versatile API that offers a variety of features. Firstly, you can scrape web pages and convert HTML into a well-organized JSON structure. Moreover, you can launch long-running scraping sessions in the background and receive the resulting data via a webhook (callback URL).
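As a rough illustration of what "converting HTML into a JSON structure" means, the sketch below pulls a page title and its links out of a small HTML snippet and serializes them as JSON. It uses only Python's standard library and is not Page2API's actual interface; the sample HTML, the output shape, and the names `PageToDictParser` and `html_to_json` are all assumptions for the example.

```python
import json
from html.parser import HTMLParser

# Hypothetical sample page used instead of a live website.
SAMPLE_HTML = """
<html><head><title>Example Store</title></head>
<body>
  <a href="/laptops">Laptops</a>
  <a href="/phones">Phones</a>
</body></html>
"""


class PageToDictParser(HTMLParser):
    """Builds a dict with the page title and every link's href and text."""

    def __init__(self):
        super().__init__()
        self.result = {"title": "", "links": []}
        self._current = None  # "title", "a", or None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._current = "title"
        elif tag == "a":
            href = dict(attrs).get("href")
            self.result["links"].append({"href": href, "text": ""})
            self._current = "a"

    def handle_endtag(self, tag):
        if tag in ("title", "a"):
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.result["title"] += data
        elif self._current == "a":
            self.result["links"][-1]["text"] += data


def html_to_json(html):
    """Parse `html` and return the extracted fields as a JSON string."""
    parser = PageToDictParser()
    parser.feed(html)
    return json.dumps(parser.result, indent=2)


page_json = html_to_json(SAMPLE_HTML)
```

The resulting string has one `title` field and a `links` array, which is the kind of predictable structure that downstream code can consume without touching the original HTML.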

Page2API lets you define a custom scenario: a set of instructions that will wait for specific elements, execute JavaScript, handle pagination, and much more. For hard-to-scrape websites, it offers Premium (Residential) Proxies located in 138 countries around the world.
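Handling pagination, one of the scenario steps mentioned above, usually comes down to following a "next page" pointer until there is none left. The sketch below shows that loop against an in-memory stand-in for a website. It does not use Page2API; `FAKE_SITE`, `fetch_page`, and `scrape_all_pages` are hypothetical names, and a real `fetch_page` would download and parse each page.

```python
# Hypothetical in-memory "site": each URL maps to its items and the next URL.
FAKE_SITE = {
    "/items?page=1": {"items": ["a", "b"], "next": "/items?page=2"},
    "/items?page=2": {"items": ["c"], "next": "/items?page=3"},
    "/items?page=3": {"items": ["d", "e"], "next": None},
}


def fetch_page(url):
    """Stand-in for downloading and parsing one page (assumption)."""
    return FAKE_SITE[url]


def scrape_all_pages(start_url, max_pages=100):
    """Follow 'next' links from start_url and collect every item.

    Stops when there is no next page, when a URL repeats (to avoid
    loops), or after max_pages pages have been visited.
    """
    items = []
    visited = set()
    url = start_url
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)
        page = fetch_page(url)
        items.extend(page["items"])
        url = page["next"]
    return items


all_items = scrape_all_pages("/items?page=1")
```

The `max_pages` cap and the `visited` set are defensive choices: they keep the loop from running forever if a site links its last page back to an earlier one.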