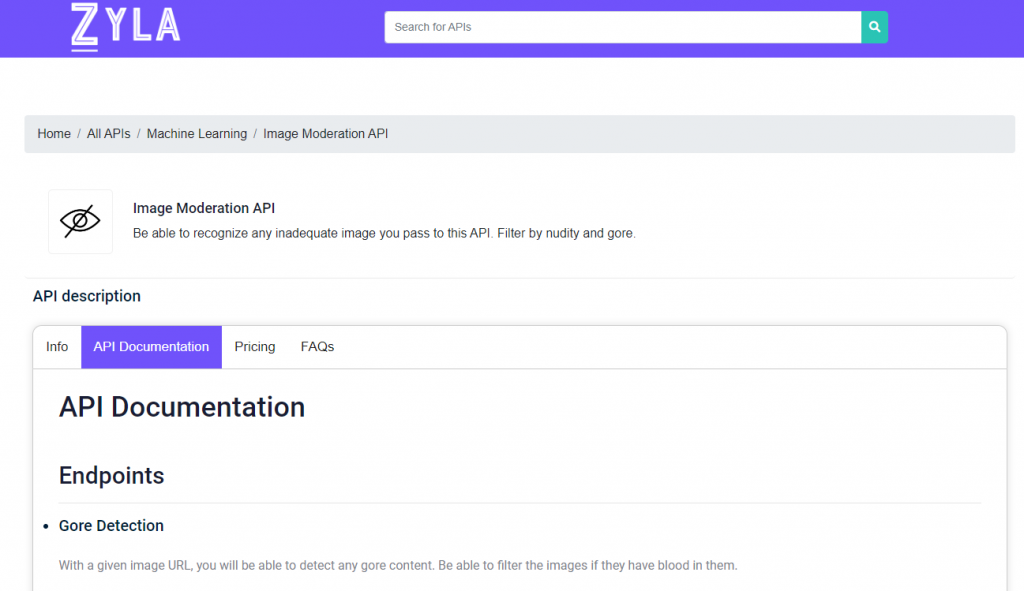

If you’re looking for an image moderator API that can help you keep your sites and applications free from inappropriate content, then you should try the Image Moderation API. This API is the smartest and most effective image moderator available on the web, and it can help you keep your content clean and safe for all users.

Image Moderation API is also easy to use and integrates with your existing systems. Simply send your images and the API will moderate them for you. It sends back a report of any inappropriate content that it finds, and you can then take whatever action you deem necessary.
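As a rough illustration of that send-and-report flow, here is a minimal Python sketch. The endpoint URL, the `Authorization` header, the `url` query parameter, and the `{"labels": [{"name": ..., "score": ...}]}` response shape are all assumptions made for the example, not the documented interface of Image Moderation API; check your provider's documentation for the real values.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint; replace with the URL from your provider's docs.
API_URL = "https://api.example.com/v1/moderate"


def build_request(image_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request asking the (assumed) endpoint to moderate one image."""
    query = urllib.parse.urlencode({"url": image_url})
    return urllib.request.Request(
        f"{API_URL}?{query}",
        headers={"Authorization": f"Bearer {api_key}"},  # assumed auth scheme
    )


def flagged_labels(report: dict, threshold: float = 0.5) -> list[str]:
    """Return the labels whose score meets the threshold.

    Assumes a report shaped like {"labels": [{"name": str, "score": float}]}.
    """
    return [
        item["name"]
        for item in report.get("labels", [])
        if item["score"] >= threshold
    ]


def moderate(image_url: str, api_key: str) -> list[str]:
    """Send the image for moderation and return the flagged labels."""
    request = build_request(image_url, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return flagged_labels(json.load(response))
```

Calling `moderate("https://example.com/photo.png", "YOUR_API_KEY")` would return a list of flagged labels, which your application can then use to hide, remove, or escalate the image.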

So why wait? Try the Image Moderation API today and see for yourself how it can help you keep your content clean and safe.

Most common uses of image moderation APIs

Image moderation APIs are becoming increasingly popular, as they offer a quick and easy way to ensure that images are suitable for public viewing. There are a number of different ways to use them; some of the most common are listed below.

- Ensure that images uploaded to a website are appropriate for public viewing. This can be especially important for websites that allow users to upload their own images, such as social media sites.

- Help identify and remove offensive or inappropriate images from a website. This can be important for sites that want to maintain a clean and family-friendly image.

- Automatically tag images with appropriate keywords for easier search and retrieval. This can be useful for websites that have a lot of images, such as online stores.

- Help protect users from being exposed to abusive comments.

How do image moderation APIs work?

Image moderation APIs use AI to automatically detect and moderate images that contain inappropriate or illegal content. This can be anything from nudity and violence to hate speech and graphic content. Many companies offer these services, and they all work in slightly different ways.

Typically, an image moderation API will use a combination of machine learning and human reviewers to moderate images. The machine learning part of the process automatically detects images that may be inappropriate, usually by looking for certain patterns or keywords in the image. For example, an image with a large proportion of skin tones may be flagged as possible nudity. Once the system detects an image as potentially inappropriate, it sends the image to a human reviewer for further review.
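The detect-then-review flow can be sketched in a few lines of Python. This is only an illustration: the `triage` function, the `REVIEW_THRESHOLD` value, and the idea that the model returns a single score between 0 and 1 are assumptions, and real services tune their thresholds per category.

```python
from collections import deque

# Assumed cut-off: scores at or above this value go to a human reviewer.
REVIEW_THRESHOLD = 0.5


def triage(image_id: str, score: float, review_queue: deque) -> str:
    """Approve an image or queue it for human review based on the model score.

    `score` is the model's estimate (0.0 to 1.0) that the image is inappropriate.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= REVIEW_THRESHOLD:
        review_queue.append(image_id)  # a human makes the final decision
        return "needs_review"
    return "approved"


# Usage: two uploads with scores from a hypothetical model.
queue = deque()
print(triage("img-001", 0.92, queue))  # needs_review
print(triage("img-002", 0.03, queue))  # approved
print(list(queue))                     # ['img-001']
```

Keeping the human step behind a threshold means reviewers only see the images the model is unsure about or considers risky, instead of every upload.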

Image Moderation API: the smartest content moderator AI system

If you’re looking for a way to keep your website or app content clean and user-friendly, Image Moderation API is a great solution. Content moderation AI is a powerful tool that can help you automatically remove offensive or inappropriate content from your site. And Image Moderation API is the smartest and most effective content moderation AI system on the market.

Here’s how it works: Image Moderation API uses machine learning to automatically identify and flag offensive or inappropriate images. Because it monitors your content continuously, it helps keep your website clean and user-friendly.

If you want to keep your content clean and user-friendly, Image Moderation API is a strong option.

If you found this post interesting and want to know more, continue reading at https://www.thestartupfounder.com/try-out-this-drugs-detection-api-in-2023/