If you’re looking for a quick and easy way to moderate images, an API is a great option. An API (Application Programming Interface) is a set of rules that allows software programs to communicate with each other. An image moderation API typically accepts an image and returns an assessment of its content, such as labels or scores for categories like nudity or violence, which your application can then act on.

There are a few different ways to moderate images with an API. One way is to have the API automatically flag certain types of content, such as nudity or violence. Another way is to use the API as a first pass and have a person review the images before they are published. Manual review can be a time-consuming process, but it can be worth it to ensure that nothing inappropriate is published.

No matter which method you choose, an API can be a helpful tool for moderating content from images.

What is image moderation?

Image moderation is the process of inspecting images for offensive or inappropriate content. This can be done manually by humans or by using artificial intelligence (AI) algorithms. Image moderation is often used to protect users from seeing harmful or offensive content, such as nudity, violence, or hate speech.

While image moderation can be a powerful tool for keeping users safe, it’s important to remember that it’s not perfect. AI algorithms can make mistakes, and humans can also miss things. So it’s important to have a system in place that can catch errors and take action accordingly.
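One way to build such a safety net is to act automatically only when the scores are clear-cut and to send everything in between to a person. The sketch below assumes the moderation step produces a score between 0 and 1 for each category; the `route` function, the `Decision` names, and the threshold values are illustrative assumptions, not part of any particular product.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    HUMAN_REVIEW = "human_review"


def route(scores: dict[str, float], reject_at: float = 0.9, approve_below: float = 0.2) -> Decision:
    """Decide what to do with an image from its per-category scores.

    The thresholds are placeholder values; tune them on your own data.
    """
    if not scores:
        # No scores at all is itself suspicious, so let a person look.
        return Decision.HUMAN_REVIEW
    worst = max(scores.values())
    if worst >= reject_at:
        return Decision.REJECT
    if worst < approve_below:
        return Decision.APPROVE
    # Uncertain cases are where automated checks are most likely to be wrong.
    return Decision.HUMAN_REVIEW
```

With these example thresholds, an image scoring 0.5 for violence would go to a human reviewer rather than being approved or rejected automatically.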

Image moderation is an important part of keeping the internet safe for everyone. By working to remove offensive content, we can make the internet a more welcoming and enjoyable place for all.

How does content moderation from images work?

Content moderation is the process of reviewing content and deciding whether it can be published. It’s a necessary step before publication, and it helps ensure that only content that meets the platform’s standards goes live.

Moderation applies to many kinds of content, and images are one of the most common. Images can be reviewed and approved for publication just like any other piece of content.

So how does content moderation from images work? Here’s a quick rundown:

- First, the image is uploaded to a content moderation platform.

- Next, a team of reviewers reviews the image and decides whether or not it meets the publication standards.

- If the image is approved, it is then published. If it is not approved, it is either rejected or sent back for revision.
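The steps above can be sketched as a small status model. This is only an illustration of the workflow, not how any specific moderation platform is implemented; the `Submission` class, the `Status` names, and the `fixable` flag are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    UPLOADED = "uploaded"
    PUBLISHED = "published"
    REJECTED = "rejected"
    NEEDS_REVISION = "needs_revision"


@dataclass
class Submission:
    image_name: str
    status: Status = Status.UPLOADED  # step 1: the image has been uploaded


def review(submission: Submission, meets_standards: bool, fixable: bool = False) -> Submission:
    """Apply a reviewer's verdict (steps 2 and 3) to an uploaded image."""
    if submission.status is not Status.UPLOADED:
        raise ValueError(f"{submission.image_name} has already been reviewed")
    if meets_standards:
        submission.status = Status.PUBLISHED
    elif fixable:
        # Not approved, but sent back to the uploader for revision.
        submission.status = Status.NEEDS_REVISION
    else:
        submission.status = Status.REJECTED
    return submission
```

For example, `review(Submission("banner.png"), meets_standards=False, fixable=True)` leaves the submission in the `NEEDS_REVISION` state.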

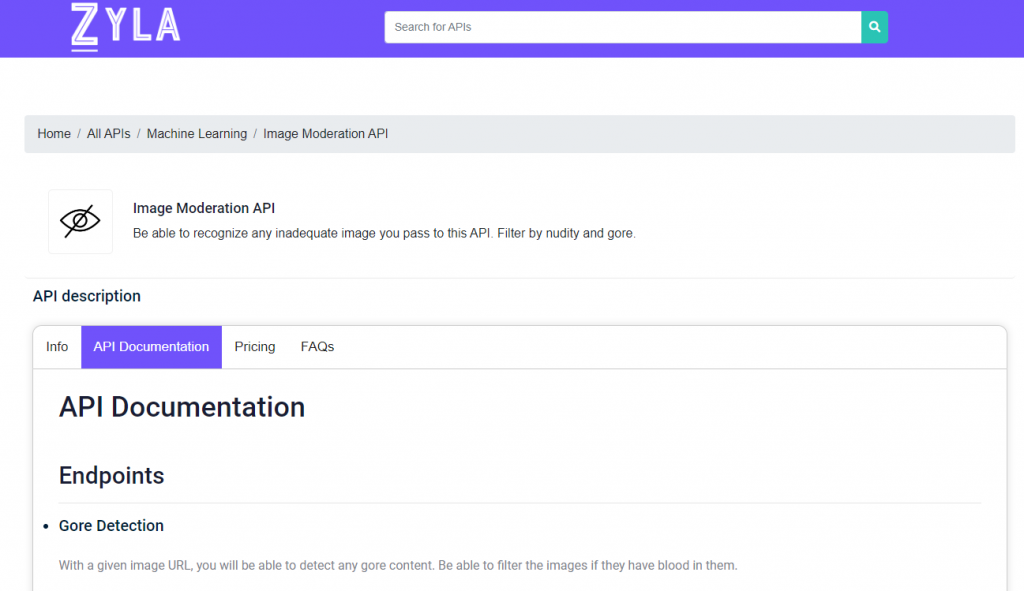

A simpler and faster alternative is to use an API, such as the Image Moderation API.

The best tool to moderate images: Image Moderation API

If you’re looking for a tool to help you moderate images, look no further than the Image Moderation API. This handy API can help you automatically moderate images for things like nudity, violence, or hate speech. And it’s easy to use – simply send your images to the API and it will do the rest.
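As a rough idea of what "sending an image to the API" can look like in code, here is a minimal sketch using only the Python standard library. The endpoint URL, the `image_url` field, the bearer-token header, and the `{"scores": {...}}` response shape are all assumptions made for illustration; the real values depend on your provider's documentation.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL from your provider's documentation.
ENDPOINT = "https://api.example.com/v1/moderate"


def build_request(image_url: str, api_key: str, endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the API to check one image."""
    payload = json.dumps({"image_url": image_url}).encode("utf-8")  # assumed field name
    return urllib.request.Request(
        endpoint,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed authentication scheme
            "Content-Type": "application/json",
        },
    )


def parse_scores(body: bytes) -> dict[str, float]:
    """Extract per-category scores from an assumed {"scores": {...}} JSON body."""
    data = json.loads(body)
    return {label: float(score) for label, score in data.get("scores", {}).items()}


def moderate(image_url: str, api_key: str) -> dict[str, float]:
    """Send the request and return the parsed scores (performs a network call)."""
    with urllib.request.urlopen(build_request(image_url, api_key), timeout=10) as resp:
        return parse_scores(resp.read())
```

Keeping the request building and response parsing separate from the network call makes both easy to check without contacting a real service.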

So why use the Image Moderation API? For one, it’s a lot faster and easier than moderating images by hand. It can also help you keep your content clean and safe for all audiences. So if you’re looking for a way to quickly and easily moderate images, the Image Moderation API is worth a try.
