Have you ever heard of web scraping? If you do not work in data analysis, you may not have, and the term "web scraping tools" may sound like a foreign language. Yet on more than one occasion you have probably had to fill in an Excel list by hand, copying and pasting data from an online directory or web page. Right? It happened to me hundreds of times before I got used to the tools that take advantage of Big Data. As you will have found, few things are more tedious than copying content into a database. Web scraping is a technique for the automated extraction of data from web pages. Keep reading "All You Need To Know About The Automated Scraping API" and we will tell you about Codery, an Automated Scraping API that lets you obtain the data you need.

Web scraping tools are specially designed to extract information from websites automatically. They are also known as “scrapers”. These tools are very useful for anyone trying to collect data from a web page.

The most common practical uses I have found for them are the following:

- Extract contact data such as email addresses

- Extract the titles and contents of a blog

- Create an RSS feed of the contents of a web page

- Follow the evolution of prices of different products
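
As a rough illustration of the first two uses in the list above, here is a minimal sketch that pulls post titles and email addresses out of a page using only the Python standard library. The sample HTML, the choice of `<h2>` as the title tag, and the helper names `extract_titles` and `extract_emails` are assumptions made for this example; a real page would need its own selectors, and the email pattern is deliberately simple.

```python
import re
from html.parser import HTMLParser

# Hypothetical sample page; a real scraper would download this HTML first.
SAMPLE_HTML = """
<html><body>
  <h2>First post</h2>
  <p>Contact: alice@example.com</p>
  <h2>Second post</h2>
  <p>Write to bob@example.org or alice@example.com</p>
</body></html>
"""

# Deliberately simple pattern; it does not cover every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class TitleParser(HTMLParser):
    """Collects the text inside every <h2> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self.in_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.titles[-1] += data


def extract_titles(html):
    """Return the stripped text of every <h2> in the document."""
    parser = TitleParser()
    parser.feed(html)
    return [title.strip() for title in parser.titles]


def extract_emails(html):
    """Return each address once, in alphabetical order."""
    return sorted(set(EMAIL_RE.findall(html)))
```

Calling `extract_titles(SAMPLE_HTML)` and `extract_emails(SAMPLE_HTML)` gives two plain lists that can be written straight into a spreadsheet instead of being copied by hand.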

The main advantage of these tools is that they are easy to use and do not require extensive programming knowledge. Keep in mind, though, that while web scraping can save a great deal of effort when obtaining data, these tools are partial solutions that cannot replace more advanced competitive intelligence or market analysis solutions.

When data requests are large-scale or too complex, web scraping tends to fail. If you need more advanced solutions, it is better to turn to DaaS (Data as a Service) providers that deliver the data you need. You can search for this kind of alternative at https://zylalabs.com/api-marketplace

Importance of automating scraping

Modern businesses depend on data to function. Therefore, extracting information from the Internet is increasingly important for companies. However, if the source of the information is unstructured, extracting the data you need can be very difficult.

For example, you may need to extract information from the body of incoming emails, which do not have a predetermined structure. With traditional methods, web scraping may require custom processing and filtering algorithms for each site. You may also need additional scripts or tools to integrate the extracted information with the rest of your IT infrastructure.

Your employees are busy and don't have time to handle web scraping and data extraction by hand. Any company that handles a large volume of information needs a comprehensive automation tool to bridge the gap between unstructured data and business applications.
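
To make the "custom processing for each source" point concrete, here is a small sketch of what such hand-written extraction might look like for one kind of email. The message text, the order-number format (`AB-1042`), and the function name `parse_order_email` are all invented for illustration; every sender with a different layout would need its own patterns, which is exactly the maintenance burden described above.

```python
import re

# Hypothetical patterns for one kind of email; each sender would need its own.
ORDER_RE = re.compile(
    r"order\s*(?:number|no\.?|#)?\s*:?\s*([A-Z]{2}-\d+)", re.IGNORECASE
)
TOTAL_RE = re.compile(r"total\s*:?\s*\$?\s*(\d+(?:\.\d{2})?)", re.IGNORECASE)


def parse_order_email(body):
    """Return the fields found in a free-text email body (None when missing)."""
    order = ORDER_RE.search(body)
    total = TOTAL_RE.search(body)
    return {
        "order_id": order.group(1) if order else None,
        "total": float(total.group(1)) if total else None,
    }


# Invented example message with no fixed structure.
EXAMPLE_BODY = """Hi team,
thanks for the quick reply. Our order number: AB-1042 shipped yesterday.
Total: $249.90 as agreed.
"""
```

Here `parse_order_email(EXAMPLE_BODY)` returns a dictionary with the order ID and the total, while a message that matches neither pattern yields `None` for both fields rather than raising an error.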

Therefore, the best solution is to have software like Codery, specially designed for this task.

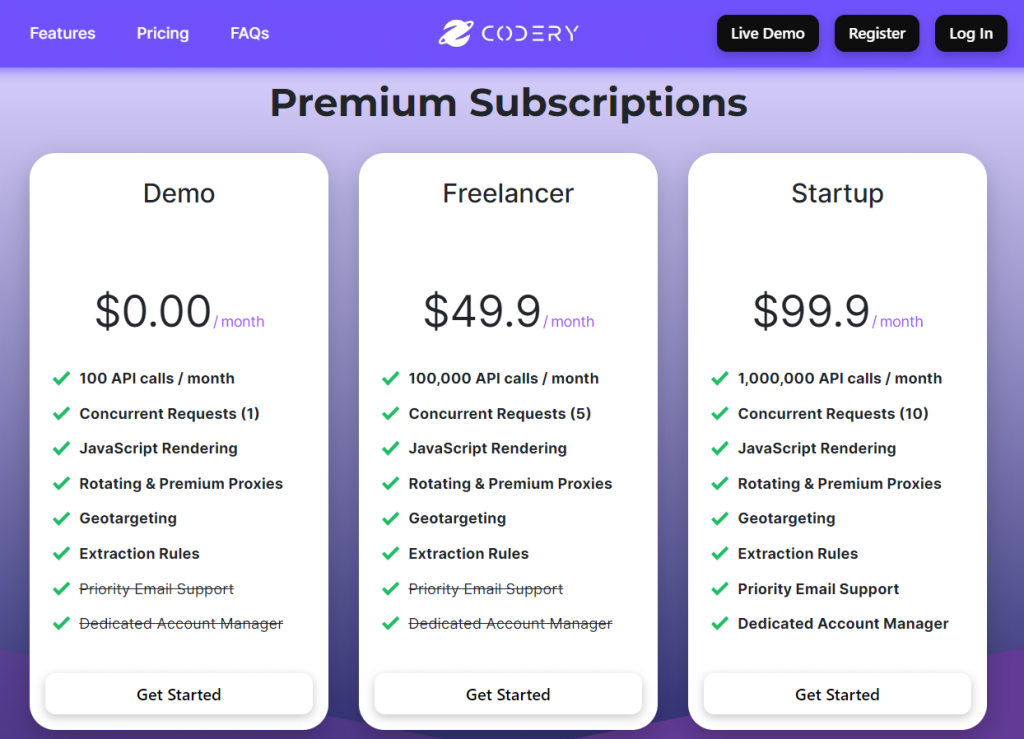

Codery: the best Automated Scraping API

The Codery API crawls a website and extracts all of its structured data. You only need to provide the URL and the API will take care of the rest. With a single request, its large-scale crawling engine crawls the pages. To handle all types of websites, it uses a real browser to scrape the page and run all of the JavaScript on it. The API also has millions of reliable proxies available, so it can gather the required information without fear of being blocked.

Once they understand how it works, developers can use the API to extract the data they want, either as a file to keep or as input to other applications.
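
The "provide the URL, send a single request" flow can be sketched roughly as follows. This is not Codery's documented interface: the endpoint `https://api.example.com/scrape`, the `url` and `render_js` query parameters, the bearer-token header, and the JSON response are all placeholder assumptions, so check the provider's documentation for the real names before using anything like this.

```python
import json
import urllib.parse
import urllib.request

# Placeholder endpoint and parameter names: NOT Codery's documented API.
API_ENDPOINT = "https://api.example.com/scrape"


def build_scrape_request(target_url, api_key, render_js=True):
    """Build (but do not send) a GET request asking the service to scrape target_url."""
    query = urllib.parse.urlencode({
        "url": target_url,  # the page to scrape, percent-encoded by urlencode
        "render_js": "true" if render_js else "false",  # assumed flag name
    })
    return urllib.request.Request(
        f"{API_ENDPOINT}?{query}",
        headers={"Authorization": f"Bearer {api_key}"},  # assumed auth scheme
    )


def fetch_structured_data(request, timeout=30):
    """Send the request and decode the body, assuming the service returns JSON."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping the request construction separate from the network call makes it easy to inspect the final URL before sending it, and to swap in the real endpoint and parameters once they are known.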